Package gplately

Version - latest indev

Introduction

TODO

Installation

1. Using conda (recommended)

The latest stable public release of GPlately can be installed using conda from the "conda-forge" channel. The following commands will create a new conda environment called "my-gplately-conda-env" and install GPlately within that environment.

conda create -n my-gplately-conda-env

conda activate my-gplately-conda-env

conda install -c conda-forge gplately

✏️ If conda gets stuck while solving the environment during the installation of GPlately, you can try using micromamba instead.

2. Using pip

GPlately can also be installed using pip.

🟢 Install the latest stable public release from PyPI

pip install gplately

🟢 Install from GitHub repository (if you need the latest code changes on GitHub)

pip install git+https://github.com/GPlates/gplately.git

🟢 Install from a local folder (if you need local code changes)

git clone https://github.com/GPlates/gplately.git gplately.git

cd gplately.git # go into the folder created by "git clone" command

git checkout master # check out the "master" branch or the name of branch you want

git pull # fetch all recent code changes from the GitHub remote repository

# make your local code changes

pip install . # alternatively, you can use "pip install -e ." to install gplately in editable mode
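After any of the pip installs above, it can be useful to confirm which version ended up in the active environment. The sketch below uses only the Python standard library; `installed_version` is a hypothetical helper name chosen for this example and is not part of GPlately.

```python
from importlib.metadata import PackageNotFoundError, version
from typing import Optional


def installed_version(package: str) -> Optional[str]:
    """Return the installed version of `package`, or None if it is not installed."""
    try:
        return version(package)
    except PackageNotFoundError:
        return None


if __name__ == "__main__":
    found = installed_version("gplately")
    print(found if found else "gplately is not installed in this environment")
```

Running this inside the environment you installed into avoids confusion when several Python environments exist on the same machine.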

3. Using Docker 🐳

👉 Run GPlately notebooks with Docker

docker pull gplates/gplately

docker run --rm -ti -p 8888:8888 gplates/gplately

- http://localhost:8888

👉 Run GPlately command with Docker

docker run gplates/gplately gplately --version

docker run gplates/gplately gplately --help

👉 Run your Python script with Docker

docker run -it --rm -v THE_FULL_PATH_TO_YOUR_SCRIPT_FOLDER:/ws -w /ws gplates/gplately python my_script_to_run.py

✏️ Replace THE_FULL_PATH_TO_YOUR_SCRIPT_FOLDER with the full path to the folder containing your script file. In PowerShell, you can use "$PWD" if your script is in the current working directory. On Linux or macOS, you can use `pwd` instead.
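The path substitution described above can also be scripted. The following is a minimal sketch that only assembles and prints the docker command; it does not run Docker. `build_docker_command` is a hypothetical helper, and the image name and the /ws mount point are taken from the command above.

```python
import shlex
from pathlib import Path
from typing import List


def build_docker_command(script_folder: str, script_name: str) -> List[str]:
    """Assemble the docker command that runs `script_name` inside the container."""
    # Resolve to a full (absolute) host path, as the note above recommends.
    host_path = Path(script_folder).resolve()
    return [
        "docker", "run", "-it", "--rm",
        "-v", f"{host_path}:/ws",  # mount the script folder at /ws
        "-w", "/ws",               # make /ws the working directory
        "gplates/gplately",
        "python", script_name,
    ]


if __name__ == "__main__":
    command = build_docker_command(".", "my_script_to_run.py")
    # shlex.join quotes any argument that contains spaces
    print(shlex.join(command))
```

Building the command as a list keeps folder names with spaces intact if the list is later passed to `subprocess.run`.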

Visit this page for more details about using Docker with GPlately.

Minimal working example

- TODO

- Show a basic, functional example with minimal dependencies.

- Keep it simple and easy to understand.

- …should satisfy getting a first user up and running quickly. Where the Quick Start in the GitHub Readme links to the 2nd and 3rd chapters (a quick start does not need to link to the Introduction, the first user is already sufficiently motivated by now).

Can then add more chapters from Dietmar's Quick Start:

- If you prefer using Jupyter Notebook, click here.

- If you prefer using Python script, click here.

Common Use Cases

- This can cover what is currently in our Quick Start.

- I.e., a brief description and code example of each of the main classes (5 or so classes).

PlateModelManager

The PlateModelManager module was introduced as a more efficient alternative to the DataServer class, designed specifically for downloading and managing plate reconstruction model files. More information about the PlateModelManager module can be found in its GitHub repository.

from gplately import (

PlateModelManager,

PlateReconstruction,

PlotTopologies,

PresentDayRasterManager,

Raster,

)

model = PlateModelManager().get_model(

"Muller2019", # model name

data_dir="plate-model-repo", # the folder to save the model files

)

recon_model = PlateReconstruction(

model.get_rotation_model(),

topology_features=model.get_layer("Topologies"),

static_polygons=model.get_layer("StaticPolygons"),

)

gplot = PlotTopologies(

recon_model,

coastlines=model.get_layer("Coastlines"),

COBs=model.get_layer("COBs"),

time=55,

)

# get present-day topography raster

raster = Raster(PresentDayRasterManager().get_raster("topography"))

# get paleo-agegrid raster at 100Ma from Muller2019 model

agegrid = Raster(model.get_raster("AgeGrids", time=100))

For more example code, a comprehensive example on GitHub demonstrates how to use the PlateModelManager module in detail. Another example shows how to use the PlateModelManager module with GPlately.

You may use the auxiliary functions to create the PlateReconstruction and PlotTopologies objects.

from gplately.auxiliary import get_gplot, get_plate_reconstruction

# use the auxiliary function to create a PlateReconstruction object

plate_reconstruction_obj = get_plate_reconstruction("Muller2019")

# use the auxiliary function to create a PlotTopologies object

plot_topologies_obj = get_gplot("Muller2019", time=140)

# there is a PlateReconstruction object inside the PlotTopologies object.

# so, in most cases, a single get_gplot() call is enough.

# you can get the PlateReconstruction object from a PlotTopologies object later, for example

another_plate_reconstruction_obj = plot_topologies_obj.plate_reconstruction

DataServer

The DataServer class lets you automatically download and cache the files needed for plate reconstructions to a designated folder on your system.

These files include rotation models, topology features, and static geometries such as coastlines, continents, and continent-ocean boundaries.

Additionally, it supports the retrieval of other data types, including rasters, grids, and feature data.

(Use the newer PlateModelManager whenever possible.)

from gplately.download import DataServer

gdownload = DataServer("Muller2019")

# Download plate reconstruction files and geometries from the Müller et al. 2019 model

rotation_model, topology_features, static_polygons = (

gdownload.get_plate_reconstruction_files()

)

coastlines, continents, COBs = gdownload.get_topology_geometries()

# Download the Müller et al. 2019 100 Ma age grid

age_grid = gdownload.get_age_grid(times=100)

# Download the ETOPO1 geotiff raster

etopo = gdownload.get_raster("ETOPO1_tif")

Both PlateModelManager and DataServer support the following plate reconstruction models:

| Model name (string identifier) | Zenodo | Topology features | Static polygons | Coastlines | Continents | COBs | Age grids | Spreading rate grids |

|---|---|---|---|---|---|---|---|---|

| Alfonso2024 | ✅ | ✅ | ✅ | ✅ | ❌ | ❌ | ❌ | ❌ |

| Cao2024 | ✅ | ✅ | ✅ | ✅ | ✅ | ✅ | ❌ | ❌ |

| Muller2022 | ✅ | ✅ | ✅ | ✅ | ✅ | ✅ | ❌ | ❌ |

| Zahirovic2022 | ✅ | ✅ | ✅ | ✅ | ✅ | ❌ | ✅ | ✅ |

| Merdith2021 | ✅ | ✅ | ✅ | ✅ | ✅ | ❌ | ❌ | ❌ |

| Clennett2020 | ✅ | ✅ | ✅ | ✅ | ❌ | ❌ | ✅ | ✅ |

| Clennett2020_M2019 | ✅ | ✅ | ✅ | ✅ | ❌ | ❌ | ✅ | ✅ |

| Clennett2020_S2013 | ✅ | ✅ | ✅ | ✅ | ❌ | ❌ | ❌ | ❌ |

| Muller2019 | ✅ | ✅ | ✅ | ✅ | ✅ | ✅ | ✅ | ❌ |

| Young2018 | ✅ | ✅ | ✅ | ✅ | ✅ | ❌ | ❌ | ❌ |

| TorsvikCocks2017 | ❌ | ❌ | ✅ | ✅ | ❌ | ❌ | ❌ | ❌ |

| Matthews2016 | ✅ | ✅ | ✅ | ✅ | ✅ | ❌ | ❌ | ❌ |

| Matthews2016_pmag_ref | ❌ | ❌ | ✅ | ✅ | ✅ | ❌ | ❌ | ❌ |

| Muller2016 | ✅ | ✅ | ✅ | ✅ | ❌ | ✅ | ✅ | ❌ |

| Scotese2016 | ✅ | ❌ | ✅ | ✅ | ❌ | ❌ | ❌ | ❌ |

| Zahirovic2016 | ✅ | ✅ | ✅ | ✅ | ✅ | ❌ | ❌ | ❌ |

| Gibbons2015 | ✅ | ✅ | ✅ | ✅ | ❌ | ❌ | ❌ | ❌ |

| Zahirovic2014 | ✅ | ❌ | ✅ | ✅ | ❌ | ❌ | ❌ | ❌ |

| Shephard2013 | ✅ | ✅ | ✅ | ✅ | ❌ | ❌ | ❌ | ❌ |

| Gurnis2012 | ✅ | ✅ | ✅ | ✅ | ❌ | ❌ | ❌ | ❌ |

| Seton2012 | ✅ | ✅ | ✅ | ✅ | ✅ | ✅ | ✅ | ❌ |

| Muller2008 | ❌ | ❌ | ✅ | ❌ | ❌ | ❌ | ❌ | ❌ |

Please note that all models have rotation files. The "Zenodo" column indicates whether the model files are available on Zenodo.
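When choosing a model programmatically, the table above can be treated as a lookup. The sketch below hard-codes a few rows copied from the table and filters them by available layer; it does not query GPlately or plate-model-manager. `MODEL_LAYERS`, `models_with` and the lowercase layer keys are hypothetical names used for illustration only.

```python
from typing import Dict, List, Set

# A few rows transcribed by hand from the table above (not fetched from any server).
MODEL_LAYERS: Dict[str, Set[str]] = {
    "Zahirovic2022": {
        "topologies", "static_polygons", "coastlines", "continents",
        "age_grids", "sr_grids",
    },
    "Clennett2020": {
        "topologies", "static_polygons", "coastlines", "age_grids", "sr_grids",
    },
    "Muller2019": {
        "topologies", "static_polygons", "coastlines", "continents",
        "cobs", "age_grids",
    },
    "Seton2012": {
        "topologies", "static_polygons", "coastlines", "continents",
        "cobs", "age_grids",
    },
    "Muller2008": {"static_polygons"},
}


def models_with(*layers: str) -> List[str]:
    """Return the names of models that provide every requested layer, sorted by name."""
    wanted = set(layers)
    return sorted(
        name for name, available in MODEL_LAYERS.items() if wanted <= available
    )


if __name__ == "__main__":
    print(models_with("age_grids", "sr_grids"))  # ['Clennett2020', 'Zahirovic2022']
    print(models_with("cobs"))                   # ['Muller2019', 'Seton2012']
```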

PlateReconstruction

The PlateReconstruction class contains tools to reconstruct geological features like tectonic plates and plate boundaries,

and to interrogate plate kinematic data like plate motion velocities, and rates of subduction and seafloor spreading.

from gplately import PlateReconstruction, PlateModelManager

model = PlateModelManager().get_model("Muller2019")

# Build a plate reconstruction model using a rotation model, a set of topology features and static polygons

recon_model = PlateReconstruction(

model.get_rotation_model(),

topology_features=model.get_layer("Topologies"),

static_polygons=model.get_layer("StaticPolygons"),

)

Alternatively, you may use the auxiliary functions to create a PlateReconstruction instance.

from gplately.auxiliary import get_plate_reconstruction

# use the auxiliary function to create a PlateReconstruction instance

plate_reconstruction_instance = get_plate_reconstruction("Muller2019")

The 02-PlateReconstructions.ipynb notebook demonstrates in detail how to use the PlateReconstruction class.

Points

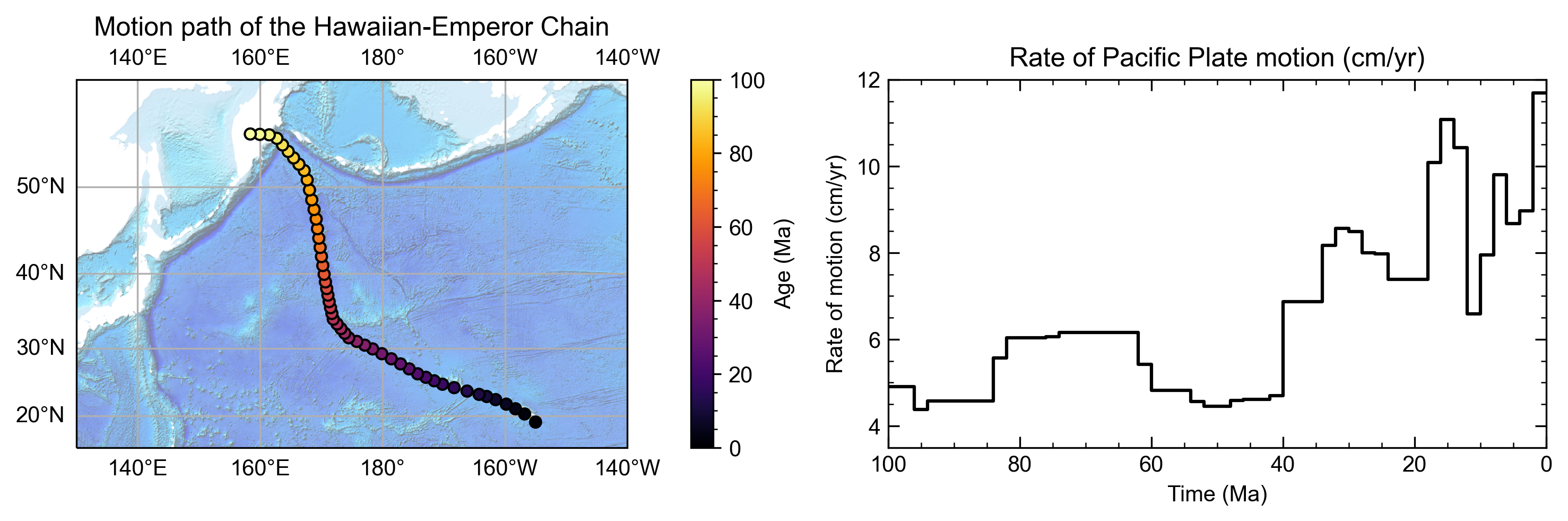

The methods in the Points class track the motion of a point (or group of points) represented by a latitude and longitude

through geologic time. This motion can be visualised using flowlines or motion paths and quantified with point

motion velocities.

import numpy as np

from gplately import PlateModelManager, Points, auxiliary

model = PlateModelManager().get_model("Muller2019")

# Create a plate reconstruction model using a rotation model, a set of topology features and static polygons

recon_model = auxiliary.get_plate_reconstruction(model)

# Define some points using their latitude and longitude coordinates so we can track them through time!

pt_lons = np.array([140.0, 150.0, 160.0])

pt_lats = np.array([-30.0, -40.0, -50.0])

# Create a Points instance from these points

gpts = Points(recon_model, pt_lons, pt_lats)

The 03-WorkingWithPoints.ipynb notebook demonstrates in detail how to use the Points class.

The 09-CreatingMotionPathsAndFlowlines.ipynb demonstrates how to create motion paths and flowlines.
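To give a feel for what a point motion velocity measures, the sketch below estimates an average speed from two positions of the same point at different times. It is a simplified illustration using the haversine formula on a spherical Earth, not the method GPlately or pyGPlates uses. The function names, the mean Earth radius of 6371 km and the sample coordinates are all assumptions made for this example.

```python
import math

EARTH_RADIUS_KM = 6371.0  # mean Earth radius; assumes a spherical Earth


def great_circle_km(lon1: float, lat1: float, lon2: float, lat2: float) -> float:
    """Haversine distance in km between two (lon, lat) points given in degrees."""
    lon1, lat1, lon2, lat2 = map(math.radians, (lon1, lat1, lon2, lat2))
    a = (
        math.sin((lat2 - lat1) / 2) ** 2
        + math.cos(lat1) * math.cos(lat2) * math.sin((lon2 - lon1) / 2) ** 2
    )
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))


def mean_speed_cm_per_yr(distance_km: float, duration_myr: float) -> float:
    """Convert a distance travelled over a duration into cm/yr."""
    # 1 km/Myr = 1e5 cm / 1e6 yr = 0.1 cm/yr
    return distance_km / duration_myr * 0.1


if __name__ == "__main__":
    # Made-up positions of one point at 10 Ma and at present day
    distance = great_circle_km(140.0, -30.0, 143.0, -27.0)
    print(f"{mean_speed_cm_per_yr(distance, 10.0):.2f} cm/yr")
```

This straight-line average ignores the curved path a point follows during plate rotation, which is one reason to prefer the velocities computed by the Points class.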

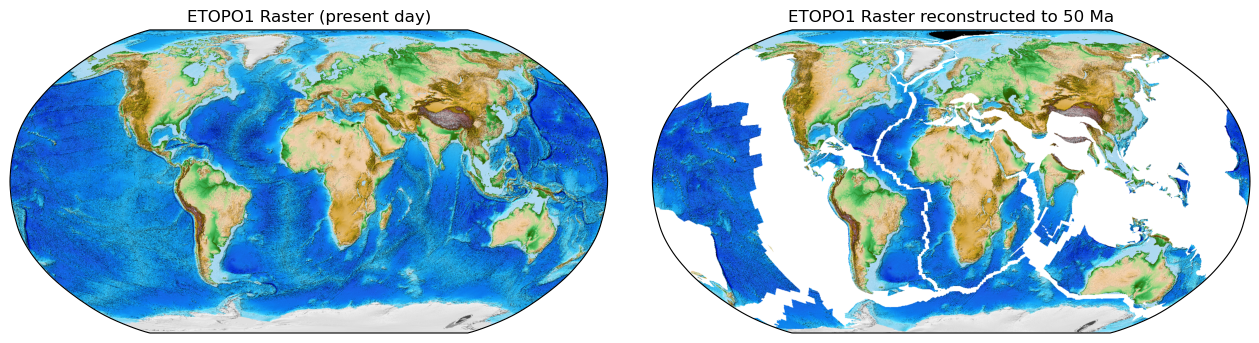

Raster

The Raster class contains methods to work with netCDF4 or MaskedArray gridded data. Grids may be filled,

resized, resampled, and reconstructed back and forwards through geologic time. Other array data can also be

interpolated onto Raster grids.

from gplately import PlateModelManager, PresentDayRasterManager, Raster, auxiliary

model_name = "Muller2019"

# Create a plate reconstruction model using a rotation model, a set of topology features and static polygons

recon_model = auxiliary.get_plate_reconstruction(model_name)

# Any numpy array can be turned into a Raster object!

raster = Raster(

plate_reconstruction=recon_model,

data=PresentDayRasterManager().get_raster("topography"),

extent="global", # equivalent to (-180, 180, -90, 90)

origin="lower", # or set extent to (-180, 180, -90, 90)

)

# Reconstruct the raster data to 50 million years ago!

reconstructed_raster = raster.reconstruct(

time=50,

partitioning_features=PlateModelManager()

.get_model(model_name)

.get_layer("ContinentalPolygons"),

)

The 06-Rasters.ipynb notebook demonstrates in detail how to use the Raster class.
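The "global" extent used in the Raster example corresponds to longitudes from -180 to 180 and latitudes from -90 to 90. As a rough illustration of how such an extent relates array indices to coordinates, the NumPy sketch below builds coordinate vectors for a grid and looks up the nearest cell. It assumes grid-line registration (values located exactly on the extent edges) and a "lower" origin (row 0 at the southern edge). It is not GPlately's implementation, and both function names are hypothetical.

```python
import numpy as np

GLOBAL_EXTENT = (-180.0, 180.0, -90.0, 90.0)  # (min lon, max lon, min lat, max lat)


def grid_coordinates(shape, extent=GLOBAL_EXTENT):
    """Return (lons, lats) vectors for a (rows, columns) grid spanning `extent`."""
    n_rows, n_cols = shape
    lons = np.linspace(extent[0], extent[1], n_cols)
    lats = np.linspace(extent[2], extent[3], n_rows)
    return lons, lats


def nearest_value(data, lon, lat, extent=GLOBAL_EXTENT):
    """Return the grid value whose node lies closest to (lon, lat)."""
    lons, lats = grid_coordinates(data.shape, extent)
    col = int(np.abs(lons - lon).argmin())
    row = int(np.abs(lats - lat).argmin())
    return data[row, col]


if __name__ == "__main__":
    # A synthetic 1-degree global grid: 181 rows (latitudes) x 361 columns (longitudes)
    data = np.arange(181 * 361).reshape(181, 361)
    print(nearest_value(data, lon=0.2, lat=0.4))  # value at the node (lon=0, lat=0)
```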

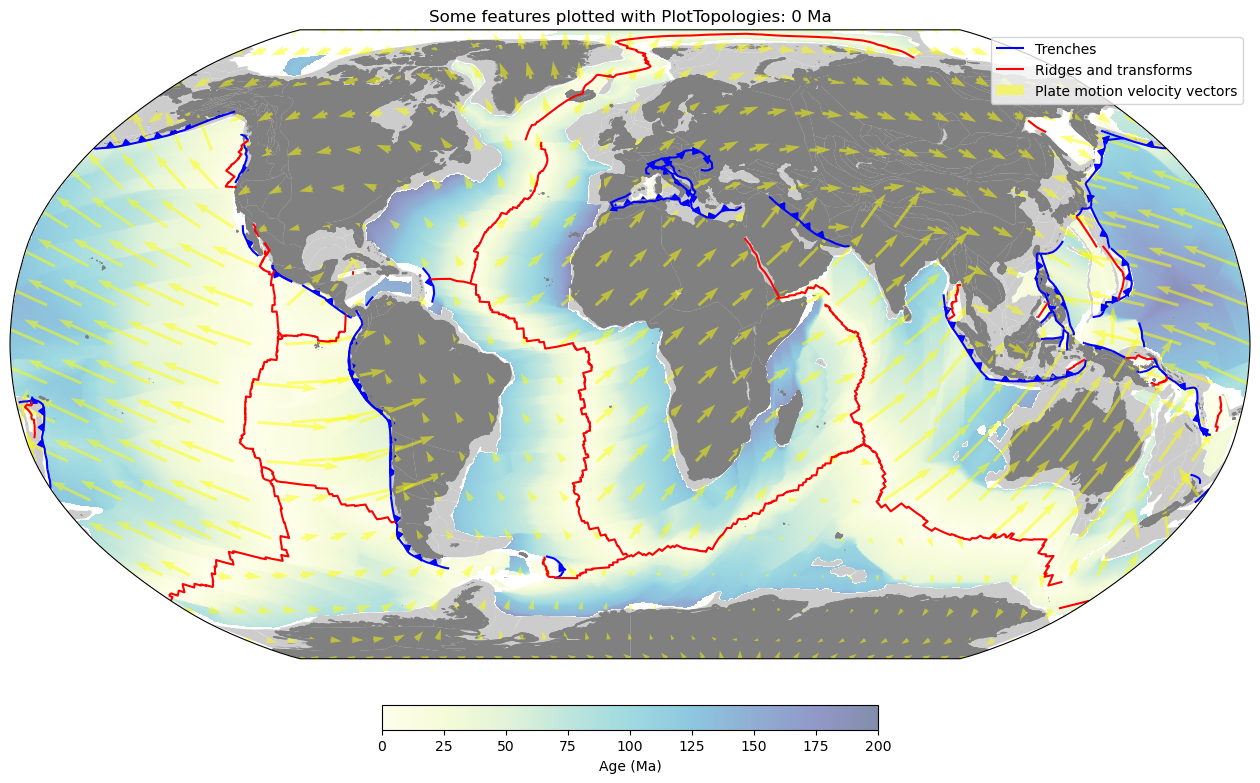

PlotTopologies

The PlotTopologies class works with the aforementioned PlateReconstruction class to plot geologic features of different types listed here, as well as coastline, continent and continent-ocean boundary geometries reconstructed through time using pyGPlates.

from gplately import PlateModelManager, PlotTopologies, auxiliary

model = PlateModelManager().get_model("Muller2019")

recon_model = auxiliary.get_plate_reconstruction(model)

gplot = PlotTopologies(

recon_model,

coastlines=model.get_layer("Coastlines"),

COBs=model.get_layer("COBs"),

continents=model.get_layer("ContinentalPolygons"),

time=55,

)

You may use the auxiliary functions to create a PlotTopologies object.

from gplately.auxiliary import get_gplot

# use the auxiliary function to create a PlotTopologies object

plot_topologies_obj = get_gplot("Muller2019", time=55)

The 02-PlateReconstructions.ipynb notebook demonstrates in detail how to use the PlotTopologies class.

SeafloorGrid

The SeafloorGrid class wraps an automatic workflow to grid seafloor ages and seafloor spreading rates

as encoded by a plate reconstruction model.

import os

os.environ["DISABLE_GPLATELY_DEV_WARNING"] = "true"

from gplately import SeafloorGrid, auxiliary

if __name__ == "__main__":

    gplot = auxiliary.get_gplot("Muller2019")

    # Set up automatic gridding from 5Ma to present day

    seafloorgrid = SeafloorGrid(

        PlateReconstruction_object=gplot.plate_reconstruction, # The PlateReconstruction object

        PlotTopologies_object=gplot, # The PlotTopologies object

        max_time=5, # start time (Ma)

        min_time=0, # end time (Ma)

        ridge_time_step=1, # time increment (Myr)

    )

    # Begin automatic gridding!

    seafloorgrid.reconstruct_by_topologies()

The 10-SeafloorGrids.ipynb is a tutorial notebook that demonstrates

how to set up and use the SeafloorGrid object, and shows a sample set of output grids.

Trouble-shooting and FAQ

And then instead of Next Steps & Links we just continue with regular detailed documentation chapters:

Examples

- TODO

- Notebooks

-

Other examples (not notebooks).

-

01 - Getting Started: A brief overview of how to initialise GPlately's main objects

- 02 - Plate Reconstructions: Setting up a

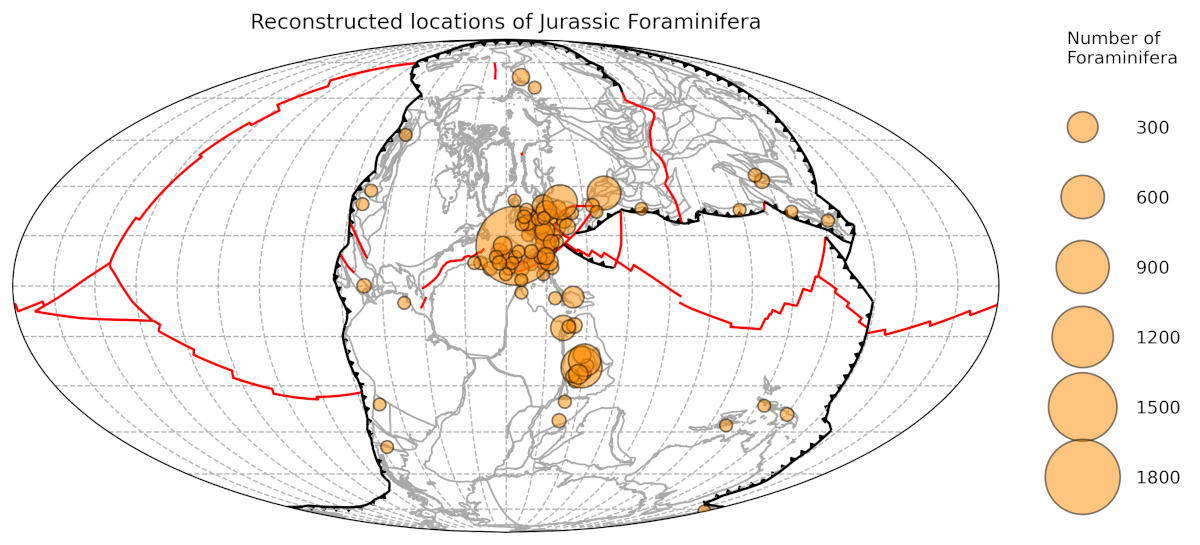

PlateReconstructionobject, reconstructing geological data through time - 03 - Working with Points: Setting up a

Pointsobject, reconstructing seed point locations through time with. This notebook uses point data from the Paleobiology Database (PBDB). - 04 - Velocity Basics: Calculating plate velocities, plotting velocity vector fields

- 05 - Working with Feature Geometries: Processing and plotting assorted polyline, polygon and point data from GPlates 2.3's sample data sets

- 06 - Rasters: Reading, resizing, resampling raster data, and linearly interpolating point data onto raster data

- 07 - Plate Tectonic Stats: Calculating and plotting subduction zone and ridge data (convergence/spreading velocities, subduction angles, subduction zone and ridge lengths, crustal surface areas produced and subducted etc.)

- 08 - Predicting Slab Flux: Predicting the average slab dip angle of subducting oceanic lithosphere.

- 09 - Motion Paths and Flowlines: Using pyGPlates to create motion paths and flowines of points on a tectonic plate to illustrate the plate's trajectory through geological time.

- 10 - SeafloorGrid: Defines the parameters needed to set up a

SeafloorGridobject, and demonstrates how to produce age and spreading rate grids from a set of plate reconstruction model files. - 11 - AndesFluxes: Demonstrates how the reconstructed subduction history along the Andean margin can be potentially used in the plate kinematics analysis and data mining.

In addition to the notebooks above, a variety of examples are available to help you get started with GPlately. Visit this page for more details.

Command-line interface

- TODO

- Maybe goes through each command with an example.

- Could possibly be merged into another chapter.

GPlately comes with a collection of useful command-line tools, each designed as a subcommand of GPlately.

For example, the command gplately list shows a list of available reconstruction models.

To view all the available tools, simply run gplately -h. For a detailed list of the tools along with usage examples,

visit this page.

Primer

- TODO

- This is like the Reference Manual mentioned below.

API Reference

- TODO

- This is the main part of GPlately.

- It's covered very well.

Sub-modules

gplately.auxiliary

gplately.geometry

This sub-module contains tools for converting PyGPlates or GPlately geometries to Shapely geometries for mapping (and vice versa) …

gplately.gpml

This sub-module contains functions for manipulating GPML (.gpml, .gpmlz) files, as well as pygplates.Feature and pygplates.FeatureCollection …

gplately.grids

This sub-module contains tools for working with MaskedArray, ndarray and netCDF4 rasters, as well as gridded-data …

gplately.oceans

A module to generate grids of seafloor age, seafloor spreading rate and other oceanic data from the PlateReconstruction and …

gplately.parallel

This sub-module contains tools for efficiently executing routines by parallelizing them across multiple threads, utilizing multiple processing units.

gplately.plot

This sub-module contains tools for reconstructing and plotting geological features and feature data through time …

gplately.ptt

"ptt" stands for Plate Tectonics Tools …

gplately.reconstruction

This sub-module contains tools that wrap up pyGPlates and Plate Tectonic Tools functionalities for reconstructing features, working with point data, …

gplately.spatial

This sub-module contains spatial tools for calculating distances on the Earth.

gplately.tools

A module that offers tools for executing common geological calculations, mathematical conversions and numpy conversions.

gplately.utils

Classes

class DataServer(file_collection, data_dir=None, verbose=True)

The DataServer class may be deprecated in the future. We recommend using the newer plate-model-manager module whenever possible.

The methods in this DataServer class download plate reconstruction models to the cache folder on your computer from EarthByte's WebDAV server.

If the DataServer object and its methods are called for the first time, i.e. by:

# string identifier to access the Muller et al. 2019 model

gDownload = gplately.download.DataServer("Muller2019")

all requested files are downloaded into the user's 'gplately' cache folder only once. If the same object and method(s) are re-run, the files will be re-accessed from the cache provided they have not been moved or deleted.
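The download-once behaviour described above follows a common caching pattern. The following is a generic sketch of that pattern, not the DataServer implementation: `fetch_to_cache` and the `download` callable are hypothetical, and a temporary directory stands in for the real cache folder.

```python
import tempfile
from pathlib import Path
from typing import Callable


def fetch_to_cache(cache_dir: Path, filename: str, download: Callable[[], bytes]) -> Path:
    """Return the cached file, calling `download` only if the file is missing."""
    target = cache_dir / filename
    if not target.exists():
        cache_dir.mkdir(parents=True, exist_ok=True)
        target.write_bytes(download())
    return target


if __name__ == "__main__":
    calls = []

    def fake_download() -> bytes:
        # Stand-in for a real network request
        calls.append(1)
        return b"rotation data"

    with tempfile.TemporaryDirectory() as tmp:
        cache = Path(tmp) / "gplately"
        fetch_to_cache(cache, "model.rot", fake_download)
        fetch_to_cache(cache, "model.rot", fake_download)  # served from the cache
        print(len(calls))  # prints 1
        (cache / "model.rot").unlink()  # simulate a moved or deleted file
        fetch_to_cache(cache, "model.rot", fake_download)  # downloaded again
        print(len(calls))  # prints 2
```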

This page contains a list of available plate reconstruction models. For more information about these plate models, visit this EarthByte web page.

You can also use the pmm ls command to retrieve more information about a model. For instance, running pmm ls cao2024 will display details about the "Cao2024" model. Make sure to install the plate-model-manager module first by running pip install plate-model-manager before executing this command.

Parameters

file_collection : str

model name

verbose : bool, default=True

Toggle print messages regarding server/internet connection status, file availability etc.


class DataServer(object):
    """The DataServer class may be deprecated in the future.
    We recommend using the newer [plate-model-manager](https://pypi.org/project/plate-model-manager/) module whenever possible.

    The methods in this DataServer class download plate reconstruction models to the cache folder on your computer
    from EarthByte's [WebDAV server](https://repo.gplates.org/webdav/pmm/).

    If the `DataServer` object and its methods are called for the first time, i.e. by:

        # string identifier to access the Muller et al. 2019 model
        gDownload = gplately.download.DataServer("Muller2019")

    all requested files are downloaded into the user's 'gplately' cache folder only _once_. If the same
    object and method(s) are re-run, the files will be re-accessed from the cache provided they have not been
    moved or deleted.

    [This page](https://gplates.github.io/gplately/dev-doc/#dataserver) contains a list of available plate reconstruction models.
    For more information about these plate models, visit this [EarthByte web page](https://www.earthbyte.org/category/resources/data-models/global-regional-plate-motion-models/).

    You can also use the `pmm ls` command to retrieve more information about a model.
    For instance, running `pmm ls cao2024` will display details about the "Cao2024" model.
    Make sure to install the `plate-model-manager` module first by running `pip install plate-model-manager`
    before executing this command.
    """

    def __init__(self, file_collection, data_dir=None, verbose=True):
        """
        Parameters
        ----------
        file_collection: str
            model name

        verbose: bool, default=True
            Toggle print messages regarding server/internet connection status, file availability etc.
        """
        if not data_dir:
            _data_dir = path_to_cache()
        else:
            _data_dir = data_dir

        self.file_collection = file_collection.capitalize()
        self.pmm = PlateModelManager().get_model(
            self.file_collection, data_dir=str(_data_dir)
        )
        if not self.pmm:
            raise Exception(
                f"Unable to get plate model {self.file_collection}. Check if the model name is correct."
            )
        self._available_layers = self.pmm.get_avail_layers()
        self.verbose = verbose

        # initialise empty attributes
        self._rotation_model = None
        self._topology_features = None
        self._static_polygons = None
        self._coastlines = None
        self._continents = None
        self._COBs = None

    def _create_feature_collection(self, file_list):
        feature_collection = _pygplates.FeatureCollection()
        for feature in file_list:
            feature_collection.add(_pygplates.FeatureCollection(feature))
        return feature_collection

    @property
    def rotation_model(self):
        if self._rotation_model is None and self.pmm:
            self._rotation_model = _pygplates.RotationModel(
                self.pmm.get_rotation_model()
            )
            self._rotation_model.reconstruction_identifier = self.file_collection
        return self._rotation_model

    @property
    def topology_features(self):
        if self._topology_features is None and self.pmm:
            if "Topologies" in self._available_layers:
                self._topology_features = self._create_feature_collection(
                    self.pmm.get_topologies()
                )
            else:
                self._topology_features = []
        return self._topology_features

    @property
    def static_polygons(self):
        if self._static_polygons is None and self.pmm:
            if "StaticPolygons" in self._available_layers:
                self._static_polygons = self._create_feature_collection(
                    self.pmm.get_static_polygons()
                )
            else:
                self._static_polygons = []
        return self._static_polygons

    @property
    def coastlines(self):
        if self._coastlines is None and self.pmm:
            if "Coastlines" in self._available_layers:
                self._coastlines = self._create_feature_collection(
                    self.pmm.get_coastlines()
                )
            else:
                self._coastlines = []
        return self._coastlines

    @property
    def continents(self):
        if self._continents is None and self.pmm:
            if "ContinentalPolygons" in self._available_layers:
                self._continents = self._create_feature_collection(
                    self.pmm.get_continental_polygons()
                )
            else:
                self._continents = []
        return self._continents

    @property
    def COBs(self):
        if self._COBs is None and self.pmm:
            if "COBs" in self._available_layers:
                self._COBs = self._create_feature_collection(self.pmm.get_COBs())
            else:
                self._COBs = []
        return self._COBs

    @property
    def from_age(self):
        if self.pmm:
            return self.pmm.get_big_time()

    @property
    def to_age(self):
        if self.pmm:
            return self.pmm.get_small_time()

    @property
    def time_range(self):
        return self.from_age, self.to_age

    @property
    def valid_times(self):
        return self.from_age, self.to_age

    def get_plate_reconstruction_files(self):
        """Downloads and constructs a `rotation model`, a set of `topology features` and
        a set of `static polygons`. These objects can then be used to create `PlateReconstruction` object.

        Returns
        -------
        rotation_model : instance of <pygplates.RotationModel>
            A rotation model to query equivalent and/or relative topological plate rotations
            from a time in the past relative to another time in the past or to present day.
        topology_features : instance of <pygplates.FeatureCollection>
            Point, polyline and/or polygon feature data that are reconstructable through
            geological time.
        static_polygons : instance of <pygplates.FeatureCollection>
            Present-day polygons whose shapes do not change through geological time. They are
            used to cookie-cut dynamic polygons into identifiable topological plates (assigned
            an ID) according to their present-day locations.

        Notes
        -----
        The get_plate_reconstruction_files() method downloads reconstruction files from a given plate model.
        For example,

            gDownload = gplately.download.DataServer("Muller2019")
            rotation_model, topology_features, static_polygons = gDownload.get_plate_reconstruction_files()

        The code above downloads `rotation model`, `topology features` and `static polygons` files from the
        Müller et al. (2019) plate reconstruction model. These files can then be used to create `PlateReconstruction` object.

            model = gplately.reconstruction.PlateReconstruction(rotation_model, topology_features, static_polygons)

        If the requested plate model does not have certain file(s), a warning message will alert user of the missing file(s).
        """
        return self.rotation_model, self.topology_features, self.static_polygons

    def get_topology_geometries(self):
        """Uses the [plate-model-manager](https://pypi.org/project/plate-model-manager/) to download coastline, continent and COB (continent-ocean boundary)
        Shapely geometries from the requested plate model. These are needed to call the `PlotTopologies`
        object and visualise topological plates through time.

        Parameters
        ----------
        verbose : bool, default True
            Toggle print messages regarding server/internet connection status, file availability etc.

        Returns
        -------
        coastlines : instance of <pygplates.FeatureCollection>
            Present-day global coastline Shapely polylines cookie-cut using static polygons. Ready for
            reconstruction to a particular geological time and for plotting.

        continents : instance of <pygplates.FeatureCollection>
            Cookie-cutting Shapely polygons for non-oceanic regions (continents, intra-oceanic arcs, etc.)
            ready for reconstruction to a particular geological time and for plotting.

        COBs : instance of <pygplates.FeatureCollection>
            Shapely polylines resolved from .shp and/or .gpml topology files that represent the
            locations of the boundaries between oceanic and continental crust.
            Ready for reconstruction to a particular geological time and for plotting.

        Notes
        -----
        This method accesses the plate reconstruction model ascribed to the `file_collection`
        string passed into the `DataServer` object. For example, if the object was called with
        `"Muller2019"`:

            gDownload = gplately.download.DataServer("Muller2019")
            coastlines, continents, COBs = gDownload.get_topology_geometries()

        the method will attempt to download `coastlines`, `continents` and `COBs` from the Müller
        et al. (2019) plate reconstruction model. If found, these files are returned as individual
        pyGPlates Feature Collections. They can be passed into:

            gPlot = gplately.plot.PlotTopologies(gplately.reconstruction.PlateReconstruction, time, continents, coastlines, COBs)

        to reconstruct features to a certain geological time. The `PlotTopologies`
        object provides simple methods to plot these geometries along with trenches, ridges and
        transforms (see documentation for more info). Note that the `PlateReconstruction` object
        is a parameter.

        * Note: If the requested plate model does not have a certain geometry, a
        message will be printed to alert the user. For example, if `get_topology_geometries()`
        is used with the `"Matthews2016"` plate model, the workflow will print the following
        message:

                No continent-ocean boundaries in Matthews2016.
        """
        return self.coastlines, self.continents, self.COBs

    def get_age_grid(self, times):
        """Downloads seafloor and paleo-age grids from the plate reconstruction model (`file_collection`)
        passed into the `DataServer` object. Stores grids in the "gplately" cache.

        Currently, `DataServer` supports the following age grids:

        * __Muller et al. 2019__

            * `file_collection` = `Muller2019`
            * Time range: 0-250 Ma
            * Seafloor age grid rasters in netCDF format.

        * __Muller et al. 2016__

            * `file_collection` = `Muller2016`
            * Time range: 0-240 Ma
            * Seafloor age grid rasters in netCDF format.

        * __Seton et al. 2012__

            * `file_collection` = `Seton2012`
            * Time range: 0-200 Ma
            * Paleo-age grid rasters in netCDF format.

        Parameters
        ----------
        times : int, or list of int, default=None
            Request an age grid from one (an integer) or multiple reconstruction times (a
            list of integers).

        Returns
        -------
        a gplately.Raster object
            A gplately.Raster object containing the age grid. The age grid data can be extracted
            into a numpy ndarray or MaskedArray by appending `.data` to the variable assigned to
            `get_age_grid()`.

            For example:

                gdownload = gplately.DataServer("Muller2019")

                graster = gdownload.get_age_grid(times=100)

                graster_data = graster.data

            where `graster_data` is a numpy ndarray.

        Raises
        -----
        ValueError
            If `times` (a single integer, or a list of integers representing reconstruction
            times to extract the age grids from) is not passed.

        Notes
        -----
        The first time that `get_age_grid` is called for a specific time(s), the age grid(s)
        will be downloaded into the GPlately cache once. Upon successive calls of `get_age_grid`
        for the same reconstruction time(s), the age grids will not be re-downloaded; rather,
        they are re-accessed from the same cache provided the age grid(s) have not been moved or deleted.

        Examples
        --------
        if the `DataServer` object was called with the `Muller2019` `file_collection` string:

            gDownload = gplately.download.DataServer("Muller2019")

        `get_age_grid` will download seafloor age grids from the Müller et al. (2019) plate
        reconstruction model for the geological time(s) requested in the `times` parameter.
        If found, these age grids are returned as masked arrays.

        For example, to download Müller et al. (2019) seafloor age grids for 0Ma, 1Ma and
        100 Ma:

            age_grids = gDownload.get_age_grid([0, 1, 100])

        """
        if not self.pmm:
            raise Exception("The plate model object is None. Unable to get agegrid.")

        if "AgeGrids" not in self.pmm.get_cfg()["TimeDepRasters"]:
            raise ValueError(
                "AgeGrids are not currently available for {}".format(
                    self.file_collection
                )
            )

        age_grids = []

        time_array = np.atleast_1d(times)

        if time_array.min() < self.to_age or time_array.max() > self.from_age:
            raise ValueError("Specify a time range between {}".format(self.time_range))

        for ti in time_array:
            agegrid_filename = self.pmm.get_raster("AgeGrids", ti)
            agegrid = _gplately.grids.Raster(data=agegrid_filename)
            age_grids.append(agegrid)

        if len(age_grids) == 1:
            return age_grids[0]
        else:
            return age_grids

    def get_spreading_rate_grid(self, times):
        """Downloads seafloor spreading rate grids from the plate reconstruction
        model (`file_collection`) passed into the `DataServer` object. Stores
        grids in the "gplately" cache.

        Currently, `DataServer` supports spreading rate grids from the following plate
        models:

        * __Clennett et al. 2020__

            * `file_collection` = `Clennett2020`
            * Time range: 0-250 Ma
            * Seafloor spreading rate grids in netCDF format.

        Parameters
        ----------
        times : int, or list of int, default=None
            Request a spreading grid from one (an integer) or multiple reconstruction
            times (a list of integers).

        Returns
        -------
        a gplately.Raster object
            A gplately.Raster object containing the spreading rate grid. The spreading
            rate grid data can be extracted into a numpy ndarray or MaskedArray by
            appending `.data` to the variable assigned to `get_spreading_rate_grid()`.

            For example:

                gdownload = gplately.DataServer("Clennett2020")

                graster = gdownload.get_spreading_rate_grid(times=100)

                graster_data = graster.data

            where `graster_data` is a numpy ndarray.

        Raises
        -----
        ValueError
            If `times` (a single integer, or a list of integers representing reconstruction
            times to extract the spreading rate grids from) is not passed.

        Notes
        -----
        The first time that `get_spreading_rate_grid` is called for a specific time(s),
        the spreading rate grid(s) will be downloaded into the GPlately cache once.
        Upon successive calls of `get_spreading_rate_grid` for the same reconstruction
        time(s), the grids will not be re-downloaded; rather, they are re-accessed from
        the same cache location provided they have not been moved or deleted.

        Examples
        --------
        if the `DataServer` object was called with the `Clennett2020` `file_collection` string:

            gDownload = gplately.download.DataServer("Clennett2020")

        `get_spreading_rate_grid` will download seafloor spreading rate grids from the
        Clennett et al. (2020) plate reconstruction model for the geological time(s)
        requested in the `times` parameter. When found, these spreading rate grids are
        returned as masked arrays.

        For example, to download Clennett et al. (2020) seafloor spreading rate grids for
        0Ma, 1Ma and 100 Ma as MaskedArray objects:

            spreading_rate_grids = gDownload.get_spreading_rate_grid([0, 1, 100])

        """
        if not self.pmm:
            raise Exception(
                "The plate model object is None. Unable to get spreading rate grids."
            )

        if "SpreadingRateGrids" not in self.pmm.get_cfg()["TimeDepRasters"]:
            raise ValueError(
                "SpreadingRateGrids are not currently available for {}".format(
                    self.file_collection
                )
            )

        spread_grids = []

        time_array = np.atleast_1d(times)

        if time_array.min() < self.to_age or time_array.max() > self.from_age:
            raise ValueError("Specify a time range between {}".format(self.time_range))

        for ti in time_array:
            spreadgrid_filename = self.pmm.get_raster("SpreadingRateGrids", ti)
            spreadgrid = _gplately.grids.Raster(data=spreadgrid_filename)
            spread_grids.append(spreadgrid)

        if len(spread_grids) == 1:
            return spread_grids[0]
        else:
            return spread_grids

    def get_valid_times(self):
        """Returns a tuple of the valid plate model time range, (max_time, min_time)."""
        return self.from_age, self.to_age

    def get_raster(self, raster_id_string=None):
        """Downloads assorted raster data that are not associated with the plate
        reconstruction models supported by GPlately's `DataServer`. Stores rasters in the
        "gplately" cache.

        Currently, `DataServer` supports the following rasters and images:

        * __[ETOPO1](https://www.ngdc.noaa.gov/mgg/global/)__:
            * Filetypes available : TIF, netCDF (GRD)
            * `raster_id_string` = `"ETOPO1_grd"`, `"ETOPO1_tif"` (depending on the requested format)
            * A 1-arc minute global relief model combining land topography and ocean bathymetry.
            * Citation: doi:10.7289/V5C8276M

        Parameters
        ----------
        raster_id_string : str, default=None
            A string to identify which raster to download.

        Returns
        -------
        a gplately.Raster object
            A gplately.Raster object containing the raster data. The gridded data can be extracted
            into a numpy ndarray or MaskedArray by appending `.data` to the variable assigned to `get_raster()`.

            For example:

                gdownload = gplately.DataServer("Muller2019")

                graster = gdownload.get_raster(raster_id_string, verbose)

                graster_data = graster.data

            where `graster_data` is a numpy ndarray. This array can be visualised using
            `matplotlib.pyplot.imshow` on a `cartopy.mpl.GeoAxis` GeoAxesSubplot
            (see example below).

        Raises
        ------
        ValueError
            * if a `raster_id_string` is not supplied.

        Notes
        -----
        Rasters obtained by this method are (so far) only reconstructed to present-day.

        Examples
        --------
        To download ETOPO1 and plot it on a Mollweide projection:

            import gplately
            import numpy as np
            import matplotlib.pyplot as plt
            import cartopy.crs as ccrs

            gdownload = gplately.DataServer("Muller2019")
            etopo1 = gdownload.get_raster("ETOPO1_tif")
            fig = plt.figure(figsize=(18,14), dpi=300)
            ax = fig.add_subplot(111, projection=ccrs.Mollweide(central_longitude = -150))
            ax.imshow(etopo1, extent=[-180,180,-90,90], transform=ccrs.PlateCarree())

        """
        if raster_id_string:
            raster_path = PresentDayRasterManager().get_raster(raster_id_string)
            if raster_path.endswith(".grd") or raster_path.endswith(".nc"):
                raster = _gplately.grids.Raster(data=raster_path)
            # Otherwise, the raster is an image; use imread to process
            else:
                from matplotlib import image

                raster_matrix = image.imread(raster_path)
                raster = _gplately.grids.Raster(data=raster_matrix)

            if raster_id_string.lower() == "etopo1_tif":
                raster.lats = raster.lats[::-1]
            if raster_id_string.lower() == "etopo1_grd":
                raster._data = raster._data.astype(float)  # type: ignore

            return raster

    def get_feature_data(self, feature_data_id_string=None):
        """Downloads assorted geological feature data from web servers (i.e.
        [GPlates 2.3 sample data](https://www.earthbyte.org/gplates-2-3-software-and-data-sets/))
        into the "gplately" cache.

        Currently, `DataServer` supports the following feature data:

        * __Large igneous provinces from Johansson et al. (2018)__

            Information
            -----------
            * Formats: .gpmlz
            * `feature_data_id_string` = `Johansson2018`

            Citations
            ---------
            * Johansson, L., Zahirovic, S., and Müller, R. D., In Prep, The interplay between the eruption and
            weathering of Large Igneous Provinces and the deep-time carbon cycle: Geophysical Research Letters.

        - __Large igneous province products interpreted as plume products from Whittaker et al. (2015)__.

            Information
            -----------
            * Formats: .gpmlz, .shp
            * `feature_data_id_string` = `Whittaker2015`

            Citations
            ---------
            * Whittaker, J. M., Afonso, J. C., Masterton, S., Müller, R. D., Wessel, P., Williams, S. E., & Seton, M. (2015).
            Long-term interaction between mid-ocean ridges and mantle plumes. Nature Geoscience, 8(6), 479-483.
            doi:10.1038/ngeo2437.

        - __Seafloor tectonic fabric (fracture zones, discordant zones, V-shaped structures, unclassified V-anomalies,
        propagating ridge lineations and extinct ridges) from Matthews et al. (2011)__

            Information
            -----------
            * Formats: .gpml
            * `feature_data_id_string` = `SeafloorFabric`

            Citations
            ---------
            * Matthews, K.J., Müller, R.D., Wessel, P. and Whittaker, J.M., 2011. The tectonic fabric of the
            ocean basins. Journal of Geophysical Research, 116(B12): B12109, DOI: 10.1029/2011JB008413.

        - __Present day surface hotspot/plume locations from Whittaker et al. (2013)__

            Information
            -----------
            * Formats: .gpmlz
            * `feature_data_id_string` = `Hotspots`

            Citation
            --------
            * Whittaker, J., Afonso, J., Masterton, S., Müller, R., Wessel, P., Williams, S., and Seton, M., 2015,
            Long-term interaction between mid-ocean ridges and mantle plumes: Nature Geoscience, v. 8, no. 6,
            p. 479-483, doi:10.1038/ngeo2437.

        Parameters
        ----------
        feature_data_id_string : str, default=None
            A string to identify which feature data to download to the cache (see list of supported
            feature data above).
Returns ------- feature_data_filenames : instance of <pygplates.FeatureCollection>, or list of instance <pygplates.FeatureCollection> If a single set of feature data is downloaded, a single pyGPlates `FeatureCollection` object is returned. Otherwise, a list containing multiple pyGPlates `FeatureCollection` objects is returned (like for `SeafloorFabric`). In the latter case, feature reconstruction and plotting may have to be done iteratively. Raises ------ ValueError If a `feature_data_id_string` is not provided. Examples -------- For examples of plotting data downloaded with `get_feature_data`, see GPlately's sample notebook 05 - Working With Feature Geometries [here](https://github.com/GPlates/gplately/blob/master/Notebooks/05-WorkingWithFeatureGeometries.ipynb). """ if feature_data_id_string is None: raise ValueError("Please specify which feature data to fetch.") database = _gplately.data._feature_data() found_collection = False for collection, zip_url in database.items(): if feature_data_id_string.lower() == collection.lower(): found_collection = True feature_data_filenames = _collection_sorter( _collect_file_extension( download_from_web(zip_url[0], self.verbose), [".gpml", ".gpmlz"] ), collection, ) break if found_collection is False: raise ValueError( "{} are not in GPlately's DataServer.".format(feature_data_id_string) ) feat_data = _pygplates.FeatureCollection() if len(feature_data_filenames) == 1: feat_data.add(_pygplates.FeatureCollection(feature_data_filenames[0])) return feat_data else: feat_data = [] for file in feature_data_filenames: feat_data.append(_pygplates.FeatureCollection(file)) return feat_dataInstance variables

prop COBs-

Expand source code

@property
def COBs(self):
    if self._COBs is None and self.pmm:
        if "COBs" in self._available_layers:
            self._COBs = self._create_feature_collection(self.pmm.get_COBs())
        else:
            self._COBs = []
    return self._COBs

prop coastlines-

Expand source code

@property
def coastlines(self):
    if self._coastlines is None and self.pmm:
        if "Coastlines" in self._available_layers:
            self._coastlines = self._create_feature_collection(
                self.pmm.get_coastlines()
            )
        else:
            self._coastlines = []
    return self._coastlines

prop continents-

Expand source code

@property
def continents(self):
    if self._continents is None and self.pmm:
        if "ContinentalPolygons" in self._available_layers:
            self._continents = self._create_feature_collection(
                self.pmm.get_continental_polygons()
            )
        else:
            self._continents = []
    return self._continents

prop from_age-

Expand source code

@property
def from_age(self):
    if self.pmm:
        return self.pmm.get_big_time()

prop rotation_model-

Expand source code

@property
def rotation_model(self):
    if self._rotation_model is None and self.pmm:
        self._rotation_model = _pygplates.RotationModel(
            self.pmm.get_rotation_model()
        )
        self._rotation_model.reconstruction_identifier = self.file_collection
    return self._rotation_model

prop static_polygons-

Expand source code

@property
def static_polygons(self):
    if self._static_polygons is None and self.pmm:
        if "StaticPolygons" in self._available_layers:
            self._static_polygons = self._create_feature_collection(
                self.pmm.get_static_polygons()
            )
        else:
            self._static_polygons = []
    return self._static_polygons

prop time_range-

Expand source code

@property
def time_range(self):
    return self.from_age, self.to_age

prop to_age-

Expand source code

@property
def to_age(self):
    if self.pmm:
        return self.pmm.get_small_time()

prop topology_features-

Expand source code

@property
def topology_features(self):
    if self._topology_features is None and self.pmm:
        if "Topologies" in self._available_layers:
            self._topology_features = self._create_feature_collection(
                self.pmm.get_topologies()
            )
        else:
            self._topology_features = []
    return self._topology_features

prop valid_times-

Expand source code

@property
def valid_times(self):
    return self.from_age, self.to_age

Methods

def get_age_grid(self, times)-

Downloads seafloor and paleo-age grids from the plate reconstruction model (file_collection) passed into the DataServer object. Stores grids in the "gplately" cache.

Currently, DataServer supports the following age grids:

- Muller et al. 2019
  - file_collection = Muller2019
  - Time range: 0-250 Ma
  - Seafloor age grid rasters in netCDF format.

- Muller et al. 2016
  - file_collection = Muller2016
  - Time range: 0-240 Ma
  - Seafloor age grid rasters in netCDF format.

- Seton et al. 2012
  - file_collection = Seton2012
  - Time range: 0-200 Ma
  - Paleo-age grid rasters in netCDF format.

Parameters

times : int, or list of int, default=None
    Request an age grid from one (an integer) or multiple reconstruction times (a list of integers).

Returns

a Raster object
    A gplately.Raster object containing the age grid. The age grid data can be extracted into a numpy ndarray or MaskedArray by appending .data to the variable assigned to get_age_grid().

    For example:

    gdownload = gplately.DataServer("Muller2019")
    graster = gdownload.get_age_grid(times=100)
    graster_data = graster.data

    where graster_data is a numpy ndarray.

Raises

ValueError
    If times (a single integer, or a list of integers representing reconstruction times to extract the age grids from) is not passed.

Notes

The first time that get_age_grid is called for specific time(s), the age grid(s) will be downloaded into the GPlately cache once. Upon successive calls of get_age_grid for the same reconstruction time(s), the age grids will not be re-downloaded; rather, they are re-accessed from the same cache provided the age grid(s) have not been moved or deleted.

Examples

If the DataServer object was called with the Muller2019 file_collection string:

    gDownload = gplately.download.DataServer("Muller2019")

get_age_grid will download seafloor age grids from the Müller et al. (2019) plate reconstruction model for the geological time(s) requested in the times parameter. If found, these age grids are returned as gplately.Raster objects.

For example, to download Müller et al. (2019) seafloor age grids for 0 Ma, 1 Ma and 100 Ma:

    age_grids = gDownload.get_age_grid([0, 1, 100])
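The download-once behaviour described in the Notes can be pictured with a minimal sketch. It does not use GPlately's actual cache code: cached_fetch, fake_download and the file naming are hypothetical names chosen for illustration, and the "download" only writes a placeholder file.

```python
import tempfile
from pathlib import Path


def cached_fetch(cache_dir, time, download):
    """Return the cached file for `time`, calling `download` only if it is missing."""
    path = Path(cache_dir) / f"grid_{time}Ma.nc"  # hypothetical file naming
    if not path.exists():
        download(time, path)
    return path


calls = []


def fake_download(time, path):
    # Stand-in for a real network download: record the call, write a placeholder.
    calls.append(time)
    path.write_text(f"placeholder grid for {time} Ma")


with tempfile.TemporaryDirectory() as cache_dir:
    first = cached_fetch(cache_dir, 100, fake_download)
    second = cached_fetch(cache_dir, 100, fake_download)  # served from the cache
    same_path = first == second
    first.unlink()  # simulate the cached file being moved or deleted
    cached_fetch(cache_dir, 100, fake_download)  # missing file is fetched again

print(calls)
```

The second call does not reach the download function because the file already exists; only after the file is deleted does a third call download again.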

def get_feature_data(self, feature_data_id_string=None)-

Downloads assorted geological feature data from web servers (i.e. GPlates 2.3 sample data) into the "gplately" cache.

Currently, DataServer supports the following feature data:

- Large igneous provinces from Johansson et al. (2018)

  Information
  - Formats: .gpmlz
  - feature_data_id_string = Johansson2018

  Citations
  - Johansson, L., Zahirovic, S., and Müller, R. D., In Prep, The interplay between the eruption and weathering of Large Igneous Provinces and the deep-time carbon cycle: Geophysical Research Letters.

- Large igneous province products interpreted as plume products from Whittaker et al. (2015).

  Information
  - Formats: .gpmlz, .shp
  - feature_data_id_string = Whittaker2015

  Citations
  - Whittaker, J. M., Afonso, J. C., Masterton, S., Müller, R. D., Wessel, P., Williams, S. E., & Seton, M. (2015). Long-term interaction between mid-ocean ridges and mantle plumes. Nature Geoscience, 8(6), 479-483. doi:10.1038/ngeo2437.

- Seafloor tectonic fabric (fracture zones, discordant zones, V-shaped structures, unclassified V-anomalies, propagating ridge lineations and extinct ridges) from Matthews et al. (2011)

  Information
  - Formats: .gpml
  - feature_data_id_string = SeafloorFabric

  Citations
  - Matthews, K.J., Müller, R.D., Wessel, P. and Whittaker, J.M., 2011. The tectonic fabric of the ocean basins. Journal of Geophysical Research, 116(B12): B12109, DOI: 10.1029/2011JB008413.

- Present day surface hotspot/plume locations from Whittaker et al. (2013)

  Information
  - Formats: .gpmlz
  - feature_data_id_string = Hotspots

  Citation
  - Whittaker, J., Afonso, J., Masterton, S., Müller, R., Wessel, P., Williams, S., and Seton, M., 2015, Long-term interaction between mid-ocean ridges and mantle plumes: Nature Geoscience, v. 8, no. 6, p. 479-483, doi:10.1038/ngeo2437.

Parameters

feature_data_id_string : str, default=None
    A string to identify which feature data to download to the cache (see list of supported feature data above).

Returns

feature_data_filenames : instance of <pygplates.FeatureCollection>, or list of instance <pygplates.FeatureCollection>
    If a single set of feature data is downloaded, a single pyGPlates FeatureCollection object is returned. Otherwise, a list containing multiple pyGPlates FeatureCollection objects is returned (like for SeafloorFabric). In the latter case, feature reconstruction and plotting may have to be done iteratively.

Raises

ValueError
    If a feature_data_id_string is not provided.

Examples

For examples of plotting data downloaded with get_feature_data, see GPlately's sample notebook 05 - Working With Feature Geometries (https://github.com/GPlates/gplately/blob/master/Notebooks/05-WorkingWithFeatureGeometries.ipynb).
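The source listing for get_feature_data compares the identifier with each database key in lower case and raises ValueError when nothing matches. A stand-alone sketch of that lookup pattern follows. FEATURE_DATA and find_collection are hypothetical stand-ins for GPlately's internal database and logic, and the URLs are placeholders.

```python
# Hypothetical stand-in for the internal database; the URLs are placeholders.
FEATURE_DATA = {
    "Johansson2018": "https://example.org/johansson2018.zip",
    "Whittaker2015": "https://example.org/whittaker2015.zip",
    "SeafloorFabric": "https://example.org/seafloorfabric.zip",
    "Hotspots": "https://example.org/hotspots.zip",
}


def find_collection(feature_data_id_string=None):
    """Return the (key, url) pair whose key matches the identifier, ignoring case."""
    if feature_data_id_string is None:
        raise ValueError("Please specify which feature data to fetch.")
    wanted = feature_data_id_string.lower()
    for collection, url in FEATURE_DATA.items():
        if collection.lower() == wanted:
            return collection, url
    raise ValueError(f"{feature_data_id_string} is not in the lookup table.")


print(find_collection("seafloorfabric")[0])
```

Under this pattern, "seafloorfabric", "SeafloorFabric" and "SEAFLOORFABRIC" all resolve to the same entry, while a missing or unknown identifier fails early with a ValueError.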

def get_plate_reconstruction_files(self)-

Downloads and constructs a rotation model, a set of topology features and a set of static polygons. These objects can then be used to create a PlateReconstruction object.

Returns

rotation_model : instance of <pygplates.RotationModel>
    A rotation model to query equivalent and/or relative topological plate rotations from a time in the past relative to another time in the past or to present day.

topology_features : instance of <pygplates.FeatureCollection>
    Point, polyline and/or polygon feature data that are reconstructable through geological time.

static_polygons : instance of <pygplates.FeatureCollection>
    Present-day polygons whose shapes do not change through geological time. They are used to cookie-cut dynamic polygons into identifiable topological plates (assigned an ID) according to their present-day locations.

Notes

The get_plate_reconstruction_files() method downloads reconstruction files from a given plate model. For example,

    gDownload = gplately.download.DataServer("Muller2019")
    rotation_model, topology_features, static_polygons = gDownload.get_plate_reconstruction_files()

The code above downloads the rotation model, topology features and static polygons files from the Müller et al. (2019) plate reconstruction model. These files can then be used to create a PlateReconstruction object.

    model = gplately.reconstruction.PlateReconstruction(rotation_model, topology_features, static_polygons)

If the requested plate model does not have certain file(s), a warning message will alert the user to the missing file(s).

def get_raster(self, raster_id_string=None)-

Downloads assorted raster data that are not associated with the plate reconstruction models supported by GPlately's DataServer. Stores rasters in the "gplately" cache.

Currently, DataServer supports the following rasters and images:

- ETOPO1 (https://www.ngdc.noaa.gov/mgg/global/):
  - Filetypes available: TIF, netCDF (GRD)
  - raster_id_string = "ETOPO1_grd", "ETOPO1_tif" (depending on the requested format)
  - A 1-arc minute global relief model combining land topography and ocean bathymetry.
  - Citation: doi:10.7289/V5C8276M

Parameters

raster_id_string : str, default=None
    A string to identify which raster to download.

Returns

a Raster object
    A gplately.Raster object containing the raster data. The gridded data can be extracted into a numpy ndarray or MaskedArray by appending .data to the variable assigned to get_raster().

    For example:

    gdownload = gplately.DataServer("Muller2019")
    graster = gdownload.get_raster(raster_id_string)
    graster_data = graster.data

    where graster_data is a numpy ndarray. This array can be visualised using matplotlib.pyplot.imshow on a cartopy.mpl.geoaxes.GeoAxes (GeoAxesSubplot); see the example below.

Raises

ValueError
    - if a raster_id_string is not supplied.

Notes

Rasters obtained by this method are (so far) only reconstructed to present-day.

Examples

To download ETOPO1 and plot it on a Mollweide projection:

    import gplately
    import numpy as np
    import matplotlib.pyplot as plt
    import cartopy.crs as ccrs

    gdownload = gplately.DataServer("Muller2019")
    etopo1 = gdownload.get_raster("ETOPO1_tif")
    fig = plt.figure(figsize=(18,14), dpi=300)
    ax = fig.add_subplot(111, projection=ccrs.Mollweide(central_longitude = -150))
    ax.imshow(etopo1.data, extent=[-180,180,-90,90], transform=ccrs.PlateCarree())

def get_spreading_rate_grid(self, times)-

Downloads seafloor spreading rate grids from the plate reconstruction model (file_collection) passed into the DataServer object. Stores grids in the "gplately" cache.

Currently, DataServer supports spreading rate grids from the following plate models:

- Clennett et al. 2020
  - file_collection = Clennett2020
  - Time range: 0-250 Ma
  - Seafloor spreading rate grids in netCDF format.

Parameters

times : int, or list of int, default=None
    Request a spreading grid from one (an integer) or multiple reconstruction times (a list of integers).

Returns

a Raster object
    A gplately.Raster object containing the spreading rate grid. The spreading rate grid data can be extracted into a numpy ndarray or MaskedArray by appending .data to the variable assigned to get_spreading_rate_grid().

    For example:

    gdownload = gplately.DataServer("Clennett2020")
    graster = gdownload.get_spreading_rate_grid(times=100)
    graster_data = graster.data

    where graster_data is a numpy ndarray.

Raises

ValueError
    If times (a single integer, or a list of integers representing reconstruction times to extract the spreading rate grids from) is not passed.

Notes

The first time that get_spreading_rate_grid is called for specific time(s), the spreading rate grid(s) will be downloaded into the GPlately cache once. Upon successive calls of get_spreading_rate_grid for the same reconstruction time(s), the grids will not be re-downloaded; rather, they are re-accessed from the same cache location provided they have not been moved or deleted.

Examples

If the DataServer object was called with the Clennett2020 file_collection string:

    gDownload = gplately.download.DataServer("Clennett2020")

get_spreading_rate_grid will download seafloor spreading rate grids from the Clennett et al. (2020) plate reconstruction model for the geological time(s) requested in the times parameter. When found, these spreading rate grids are returned as gplately.Raster objects: a single Raster for one requested time, or a list of Rasters for several.

For example, to download Clennett et al. (2020) seafloor spreading rate grids for 0 Ma, 1 Ma and 100 Ma:

    spreading_rate_grids = gDownload.get_spreading_rate_grid([0, 1, 100])
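According to the source listing, a single requested time yields one object and several times yield a list, so calling code may need to handle both shapes. A small helper can normalise the result. as_list is a hypothetical name, not part of GPlately, and the strings below merely stand in for Raster objects.

```python
def as_list(result):
    """Wrap a single object in a list; return lists and tuples as a new list."""
    if isinstance(result, (list, tuple)):
        return list(result)
    return [result]


# Placeholder strings stand in for the objects returned for one or several times.
single = as_list("raster_100Ma")
several = as_list(["raster_0Ma", "raster_1Ma", "raster_100Ma"])

for grid in single + several:
    print(grid)
```

With this wrapper a loop over the grids reads the same whether one time or many were requested.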

def get_topology_geometries(self)-

Uses the plate-model-manager to download coastline, continent and COB (continent-ocean boundary) Shapely geometries from the requested plate model. These are needed to construct a PlotTopologies object and visualise topological plates through time.

Parameters

verbose : bool, default True
    Toggle print messages regarding server/internet connection status, file availability etc.

Returns

coastlines : instance of <pygplates.FeatureCollection>
    Present-day global coastline Shapely polylines cookie-cut using static polygons. Ready for reconstruction to a particular geological time and for plotting.

continents : instance of <pygplates.FeatureCollection>
    Cookie-cutting Shapely polygons for non-oceanic regions (continents, intra-oceanic arcs, etc.) ready for reconstruction to a particular geological time and for plotting.

COBs : instance of <pygplates.FeatureCollection>
    Shapely polylines resolved from .shp and/or .gpml topology files that represent the locations of the boundaries between oceanic and continental crust. Ready for reconstruction to a particular geological time and for plotting.

Notes

This method accesses the plate reconstruction model ascribed to the file_collection string passed into the DataServer object. For example, if the object was called with "Muller2019":

    gDownload = gplately.download.DataServer("Muller2019")
    coastlines, continents, COBs = gDownload.get_topology_geometries()

the method will attempt to download coastlines, continents and COBs from the Müller et al. (2019) plate reconstruction model. If found, these files are returned as individual pyGPlates Feature Collections. They can be passed into:

    gPlot = gplately.plot.PlotTopologies(gplately.reconstruction.PlateReconstruction, time, continents, coastlines, COBs)

to reconstruct features to a certain geological time. The PlotTopologies object provides simple methods to plot these geometries along with trenches, ridges and transforms (see documentation for more info). Note that the PlateReconstruction object is a parameter.

Note: If the requested plate model does not have a certain geometry, a message will be printed to alert the user. For example, if get_topology_geometries() is used with the "Matthews2016" plate model, the workflow will print the following message:

    No continent-ocean boundaries in Matthews2016.

def get_valid_times(self)-

Returns a tuple of the valid plate model time range, (max_time, min_time).
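A sketch of how the (max_time, min_time) tuple could be used to validate requested times before asking for grids. It mirrors the range check visible in the get_spreading_rate_grid source, but check_times is a hypothetical helper and the range (250, 0) is an assumed example value.

```python
def check_times(times, valid_times):
    """Raise ValueError if any requested time lies outside (max_time, min_time)."""
    max_time, min_time = valid_times
    # Accept a single number or any iterable of numbers.
    requested = [times] if isinstance(times, (int, float)) else list(times)
    if min(requested) < min_time or max(requested) > max_time:
        raise ValueError(f"Specify a time range between {valid_times}")
    return requested


print(check_times([0, 1, 100], (250, 0)))
```

Note the ordering of the tuple: the older bound comes first, so it should be unpacked as (max_time, min_time) rather than (min_time, max_time).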

class PlateReconstruction (rotation_model, topology_features=None, static_polygons=None, anchor_plate_id=None, plate_model_name: str = 'Nemo')-

The PlateReconstruction class contains methods to reconstruct topology features to specific geological times given a rotation_model, a set of topology_features and a set of static_polygons. Topological plate velocity data at specific geological times can also be calculated from these reconstructed features.

Attributes

rotation_model : pygplates.RotationModel
    A rotation model to query equivalent and/or relative topological plate rotations from a time in the past relative to another time in the past or to present day.

topology_features : pygplates.FeatureCollection, default None
    Topological features like trenches, ridges and transforms.

static_polygons : pygplates.FeatureCollection, default None
    Present-day polygons whose shapes do not change through geological time when reconstructed.

anchor_plate_id : int
    Anchor plate ID for reconstruction.

Parameters

rotation_model : str/os.PathLike, or instance of <pygplates.FeatureCollection>, or <pygplates.Feature>, or sequence of <pygplates.Feature>, or instance of <pygplates.RotationModel>
    A rotation model to query equivalent and/or relative topological plate rotations from a time in the past relative to another time in the past or to present day. Can be provided as a rotation filename, or rotation feature collection, or rotation feature, or sequence of rotation features, or a sequence (e.g. a list or tuple) of any combination of those four types.

topology_features : str/os.PathLike, or a sequence (e.g. list or tuple) of instances of <pygplates.Feature>, or a single instance of <pygplates.Feature>, or an instance of <pygplates.FeatureCollection>, default None
    Reconstructable topological features like trenches, ridges and transforms. Can be provided as an optional topology-feature filename, or sequence of features, or a single feature.

static_polygons : str/os.PathLike, or instance of <pygplates.Feature>, or sequence of <pygplates.Feature>, or an instance of <pygplates.FeatureCollection>, default None
    Present-day polygons whose shapes do not change through geological time. They are used to cookie-cut dynamic polygons into identifiable topological plates (assigned an ID) according to their present-day locations. Can be provided as a static polygon feature collection, or optional filename, or a single feature, or a sequence of features.

anchor_plate_id : int, optional
    Default anchor plate ID for reconstruction. If not specified then uses the default anchor plate of rotation_model if it's a pygplates.RotationModel (otherwise uses zero).
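The fallback rule for anchor_plate_id can be written out as a short sketch. This is an illustration only, not the class's implementation: FakeRotationModel is a stand-in for pygplates.RotationModel, and its default_anchor_plate_id attribute and the resolve_anchor_plate_id helper are assumed names rather than part of the pyGPlates or GPlately API.

```python
class FakeRotationModel:
    """Stand-in for pygplates.RotationModel (hypothetical, for illustration)."""

    def __init__(self, default_anchor_plate_id=0):
        self.default_anchor_plate_id = default_anchor_plate_id


def resolve_anchor_plate_id(rotation_model, anchor_plate_id=None):
    """Pick the anchor plate: explicit value, else the model's default, else 0."""
    if anchor_plate_id is not None:
        return int(anchor_plate_id)
    if isinstance(rotation_model, FakeRotationModel):
        return rotation_model.default_anchor_plate_id
    return 0  # e.g. a filename or feature collection carries no default


print(resolve_anchor_plate_id(FakeRotationModel(701)))
print(resolve_anchor_plate_id("rotations.rot"))
print(resolve_anchor_plate_id(FakeRotationModel(701), anchor_plate_id=0))
```

The check uses "is not None" rather than truthiness so that an explicit anchor plate of 0 is honoured instead of being mistaken for "not specified".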

Expand source code